Google Research: Geospatial Reasoning

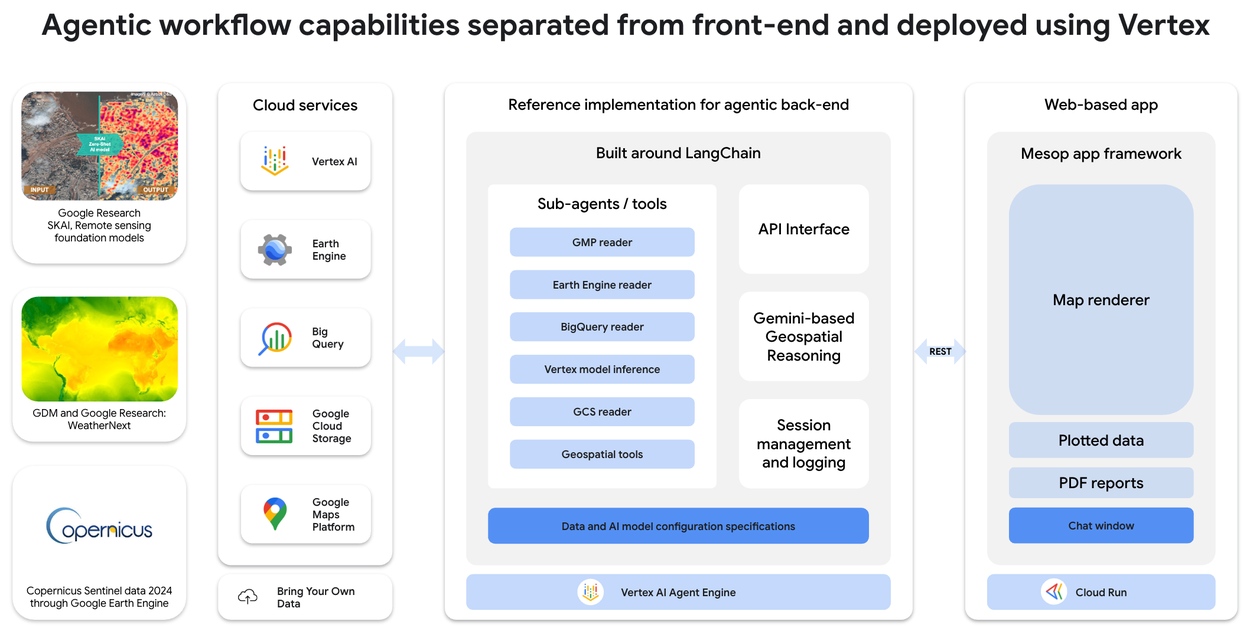

Google has invested in mapping and understanding geospatial information for many years through Google Maps, Street View, Google Earth, Google Trends, and weather forecasts on Search. To turn that information into actionable insights, the Google Research team introduced the Geospatial Reasoning framework at Google Cloud Next 25. It uses Gemini’s reasoning ability, along with inference from multiple geospatial foundation models, to provide rapid, trustworthy answers to complex geospatial problems. It allows developers, data analysts, and scientists to integrate these foundation models with their organization’s own models and datasets to build agentic workflows. I believe many physical-world problems can benefit from extending it.

Overview

Here is an overview of Geospatial Reasoning:

(Video source: Google Research)

Demo application

In the demo video, an application built with the Geospatial Reasoning framework behaves the way a crisis manager would when responding to the aftermath of a hurricane:

- Visualize the pre-disaster context in open-source satellite imagery using Earth Engine.

- Visualize the post-disaster situation by importing their own or externally sourced high-resolution aerial imagery.

- Identify areas where buildings have been damaged or flooding has occurred, with inference grounded in analysis of aerial images by Remote sensing foundation models.

- Call the WeatherNext AI weather forecast to predict where risk of further damage is likely.

- Ask Gemini questions about the extent of the damage, including:

  - Estimating the fraction of buildings damaged per neighborhood.

  - Estimating the dollar value of property damage, based on census data.

  - Suggesting how to prioritize relief efforts, based on the social vulnerability index.
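To make the kind of question Gemini is asked more concrete, here is a minimal Python sketch of two of the calculations above: the fraction of damaged buildings per neighborhood, and a relief ranking based on a vulnerability score. This is not how the framework computes its answers. The neighborhood names, the sample records, and the scoring rule (damaged fraction multiplied by vulnerability index) are all assumptions made up for illustration.

```python
from collections import defaultdict

# Hypothetical per-building assessments: (neighborhood, is_damaged).
assessments = [
    ("Riverside", True),
    ("Riverside", False),
    ("Riverside", True),
    ("Hilltop", False),
    ("Hilltop", True),
]

# Hypothetical social vulnerability index per neighborhood (0 = low, 1 = high).
vulnerability = {"Riverside": 0.3, "Hilltop": 0.9}


def damaged_fraction_by_neighborhood(records):
    """Return {neighborhood: damaged buildings / all buildings}."""
    totals = defaultdict(int)
    damaged = defaultdict(int)
    for neighborhood, is_damaged in records:
        totals[neighborhood] += 1
        if is_damaged:
            damaged[neighborhood] += 1
    return {name: damaged[name] / totals[name] for name in totals}


def prioritize(fractions, vulnerability_index):
    """Rank neighborhoods by an assumed score: damaged fraction * vulnerability."""
    def score(name):
        return fractions[name] * vulnerability_index.get(name, 0.0)
    return sorted(fractions, key=score, reverse=True)


fractions = damaged_fraction_by_neighborhood(assessments)
ranking = prioritize(fractions, vulnerability)
print(fractions)
print(ranking)
```

With this sample data, the less damaged but more vulnerable neighborhood ranks first, which shows why the weighting rule matters and would need to be chosen carefully in a real assessment.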

(Video source: Google Research)

(Image source: Google Research)


Agentic workflow

(Image source: Google Research)


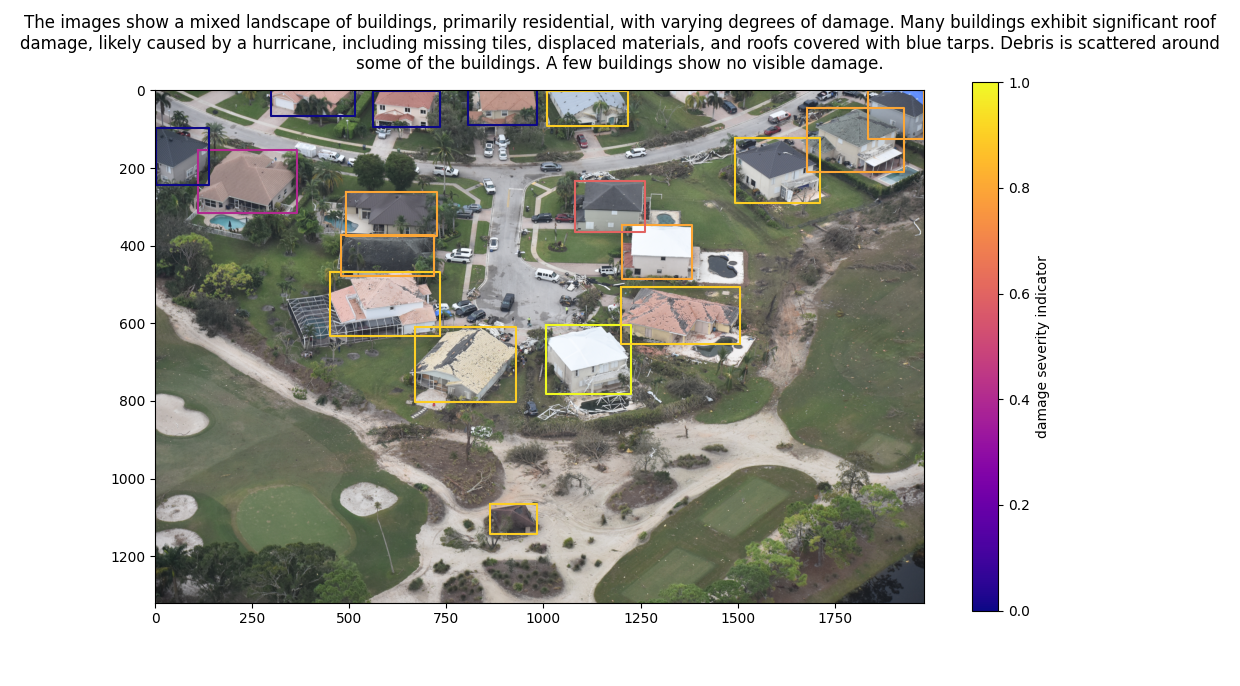

The agentic workflow that Geospatial Reasoning runs for the demo application is as follows. It illustrates how the post-hurricane damage assessment is carried out:

- A high-resolution image of the area of interest is retrieved from Google Cloud Storage.

- Gemini then calls the specialized OWL-ViT (“Simple Open-Vocabulary Object Detection with Vision Transformers”) remote sensing model for object detection, using a prompt such as “Find the buildings in this image.”

- The response is a list of bounding box coordinates (box boundaries added for visual comprehension).

- Image patches are then sent to Gemini or a task-specific captioning model for building damage assessment and the responses are summarised. The final result is illustrated in the image.
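The detect, crop, assess, and summarize steps above can be sketched in a few lines of Python. This is only an illustration of the control flow, not the framework's code. The functions `fake_detector` and `fake_assessor` are hypothetical stand-ins for the OWL-ViT remote sensing model and the Gemini (or captioning model) call, the image is a toy grid of pixel values, and retrieving the image from Cloud Storage is left out.

```python
from collections import Counter
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
Image = List[List[int]]          # toy grayscale image: rows of pixel values


def crop(image: Image, box: Box) -> Image:
    """Cut the patch covered by a bounding box out of the image."""
    x_min, y_min, x_max, y_max = box
    return [row[x_min:x_max] for row in image[y_min:y_max]]


def assess_damage(
    image: Image,
    detect: Callable[[Image, str], Sequence[Box]],
    assess: Callable[[Image], str],
    prompt: str = "Find the buildings in this image.",
) -> dict:
    """Detect buildings, assess each patch, and summarize the labels."""
    boxes = list(detect(image, prompt))
    labels = [assess(crop(image, box)) for box in boxes]
    return {"boxes": boxes, "labels": labels, "summary": dict(Counter(labels))}


def fake_detector(image: Image, prompt: str) -> Sequence[Box]:
    """Hypothetical stand-in for the object detection model."""
    return [(0, 0, 2, 2), (2, 2, 4, 4)]


def fake_assessor(patch: Image) -> str:
    """Hypothetical stand-in for the damage assessment model.

    Treats dark patches as damaged, purely so the example produces output.
    """
    pixels = [value for row in patch for value in row]
    return "damaged" if sum(pixels) / len(pixels) < 100 else "intact"


toy_image = [
    [200, 200, 50, 50],
    [200, 200, 50, 50],
    [50, 50, 30, 30],
    [50, 50, 30, 30],
]

result = assess_damage(toy_image, fake_detector, fake_assessor)
print(result["summary"])
```

Passing the detector and assessor in as callables keeps the orchestration separate from the models, so either stand-in could be swapped for a real model call without changing the loop.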

Potential opportunities

The framework suggests a wide range of potential applications:

- disaster response coordination

- smarter urban planning

- public health initiatives

- logistics, site selection, and demand forecasting

- travel